If you found your way here and are reading this, it is probably safe to assume you know about Tomasz Stobierski’s Relief Terrain Pack v3.2 and the issues its parallax effects have had since VR support in Unity became native. In that case you probably want to skip the next section.

The Problem: Two Cameras vs. One

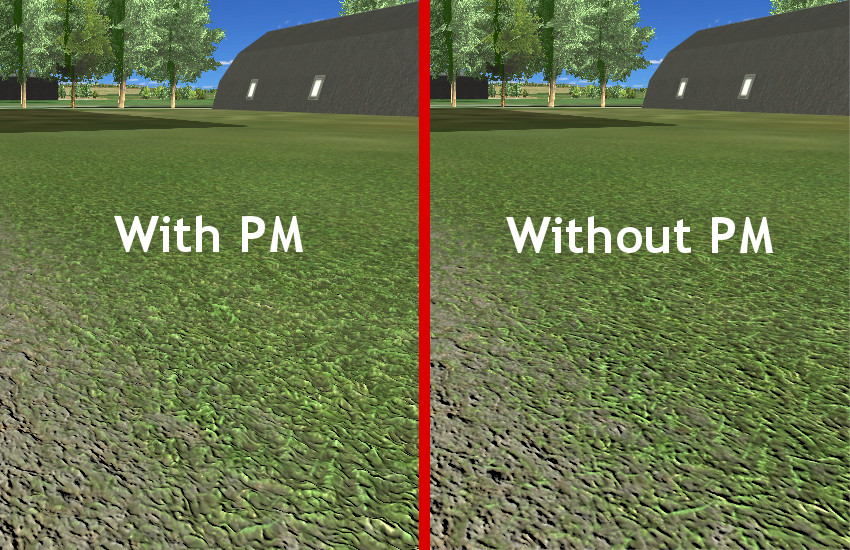

For everyone else who just joined: RTP is one of the most popular assets among Unity developers, known for its outstanding terrain rendering. Two of its features are parallax mapping (PM) and its slightly more sophisticated brother, parallax occlusion mapping (POM). In a nutshell, they are neat little tricks that add a lot of three-dimensional detail to surfaces without actually increasing the complexity of the meshes involved, so they are comparatively cheap in terms of processing power.
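To make the "neat little trick" a bit more concrete: in its simplest form, parallax mapping shifts the texture coordinate along the tangent-space view direction in proportion to the sampled height. The sketch below is a plain C# port (using System.Numerics so it runs outside Unity) of the ParallaxOffset() helper from Unity's built-in UnityCG.cginc, which is the function the RTP shader lines further down call. The constants (the 0.42 bias and the h * height - height / 2 remapping) follow Unity's implementation as far as I know it; treat them as an assumption and compare against the UnityCG.cginc shipped with your Unity version.

```csharp
using System;
using System.Numerics;

// CPU port of ParallaxOffset() from Unity's UnityCG.cginc (constants assumed, see above).
// h:       height sampled from the height map, in [0, 1]
// height:  extrusion scale (RTP passes _TERRAIN_ExtrudeHeight*_uv_Relief_z*COLOR_DAMP_VAL)
// viewDir: tangent-space direction from the surface point towards the camera (z = surface normal)
static Vector2 ParallaxOffset(float h, float height, Vector3 viewDir)
{
    h = h * height - height / 2.0f;          // remap so that mid-height means "no shift"
    Vector3 v = Vector3.Normalize(viewDir);
    v.Z += 0.42f;                            // bias that keeps grazing angles from blowing up
    return h * new Vector2(v.X, v.Y) / v.Z;  // shift the UV along the view direction's xy
}

// Looking straight down the normal: no shift, whatever the height.
Vector2 straightDown = ParallaxOffset(1.0f, 0.05f, new Vector3(0f, 0f, 1f));

// Looking in at 45 degrees along +x: the UV is shifted along +x.
Vector2 oblique = ParallaxOffset(1.0f, 0.05f, new Vector3(1f, 0f, 1f));

Console.WriteLine($"straight down: {straightDown}, oblique: {oblique}");
```

The important takeaway for what follows is that the result depends entirely on viewDir: feed the shader the wrong view direction and the shift is wrong.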

As long as the only VR support in Unity was Oculus’ plugin package for the Rift, everything was fine: the package automatically created two cameras, positioned them where your eyes would be in the virtual world, and had each camera render the image for one side of the Rift’s display. While this of course worked very well, it was not ideal for everybody, especially people with older hardware, because pretty much all expensive calculations had to be done twice per frame.

So Unity went ahead and added native VR support, which greatly improved performance because it now uses only a single camera. Everything is of course still rendered from two different perspectives, but many other expensive per-frame tasks can be shared that way. Unfortunately, at the time of writing, Unity does not tell a shader the position of the eye that is currently being rendered, only the position of the camera’s GameObject (midway between both eyes). Both eyes therefore get exactly the same parallax offsets, and the stereo depth cue is lost. So, long story short: while parallax mapping still looks perfectly fine when you watch somebody’s VR session on a computer monitor, the person wearing the headset only sees a flat surface with a strangely morphing texture.
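A small worked example shows what goes missing. The numbers are illustrative assumptions, not measurements: everything is expressed in the terrain's tangent space (z up), the viewer faces +y, the head is 1.6 m above the ground, and the interpupillary distance (IPD) is a typical 64 mm. The Shift() helper is hypothetical and reduces parallax to its core, the view direction's xy divided by its z (no height or bias), which is enough to compare the eyes.

```csharp
using System;
using System.Numerics;

// Illustrative assumptions: tangent space with z up, viewer facing +y, 64 mm IPD.
const float Ipd = 0.064f;
Vector3 surfacePoint = new Vector3(0f, 1.0f, 0f);   // a terrain point 1 m ahead
Vector3 centerEye    = new Vector3(0f, 0f, 1.6f);   // the camera GameObject, 1.6 m up
Vector3 leftEye      = centerEye - new Vector3(Ipd / 2, 0f, 0f);
Vector3 rightEye     = centerEye + new Vector3(Ipd / 2, 0f, 0f);

// Hypothetical helper: core of a parallax shift, view direction xy / z.
// The ratio does not depend on the vector's length, so no normalization is needed here.
static Vector2 Shift(Vector3 eye, Vector3 point)
{
    Vector3 v = eye - point;                  // surface -> eye
    return new Vector2(v.X, v.Y) / v.Z;
}

// Native single-camera VR: the shader only knows the center position, for both eyes.
Vector2 leftFromCenter  = Shift(centerEye, surfacePoint);
Vector2 rightFromCenter = Shift(centerEye, surfacePoint);

// What each eye would need for correct stereo parallax.
Vector2 leftActual  = Shift(leftEye, surfacePoint);
Vector2 rightActual = Shift(rightEye, surfacePoint);

Console.WriteLine($"center for both eyes: {leftFromCenter} / {rightFromCenter}");
Console.WriteLine($"per eye:              {leftActual} / {rightActual}");
```

With the center position, both eyes see an identical shift, so the brain has no disparity to read depth from; with the real eye positions, the x component differs in sign between the eyes (about -0.02 vs. +0.02 here), which is exactly the information the fix below restores.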

Unity did sort of react to this problem by making the old approach, rendering with two cameras, available again as an option. While this works, for the reasons above it has a pretty severe impact on frame rate.

The Solution (read: Hack)

So here is how I fixed this and got VR parallax mapping back without killing performance. The idea came from forum user davidlively, who suggested the overall method.

First, we need a little helper that pushes the position of the eye currently being rendered to a place where the shaders can pick it up.

```csharp
using UnityEngine;
using UnityEngine.VR; // Unity 5.x namespace; later Unity versions moved these APIs to UnityEngine.XR (XRDevice, XRNode)

public class ShaderEyeOffset : MonoBehaviour
{
    private Transform parent;       // This is the parent game object of your camera.
    private bool isLeftEye = false; // This assumes the left eye is rendered first, which I found to always be true in Unity.

    public void Awake()
    {
        parent = this.transform.parent;
    }

    // Note: This is actually what I learned from davidlively: OnPreRender() is called twice per frame, once for each eye!
    public void OnPreRender()
    {
        isLeftEye = !isLeftEye; // This assumes no eye is ever skipped, which has always worked so far.

        if (!VRDevice.isPresent)
        {
            Shader.SetGlobalVector("_GlobalEyeOffset", Vector4.zero); // no offset for 2D rendering
        }
        else
        {
            // relative to the parent!
            Vector3 worldEyePos = parent.TransformVector(
                InputTracking.GetLocalPosition(isLeftEye ? VRNode.LeftEye : VRNode.RightEye));

            // Note that Y and Z are switched for shader space!
            Shader.SetGlobalVector("_GlobalEyeOffset",
                new Vector4(worldEyePos.x, worldEyePos.z, worldEyePos.y, 0f));
        }
    }
}
```

Basically, for each eye we store the offset of its rendering position from the “center eye”. Note the use of the parent’s transform: this is the GameObject we use to manipulate our camera’s position. If your setup differs, this script will probably not work correctly. Add the script to every camera that renders terrain (to the cameras themselves, not to their parents).
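The “Y and Z are switched” comment in the script can be checked with a few dot products. Assume the terrain is flat and its tangent frame in world space is tangent = +X, bitangent = +Z, normal = +Y (a plausible frame for an upward-facing Unity terrain, but an assumption on my part, not something RTP documents here). Converting a world-space vector to tangent space means projecting it onto those three axes, and with this frame the projection collapses to exactly the (x, z, y) swizzle. The WorldToTangent() and Swizzle() helpers below are hypothetical, plain C# rather than Unity code.

```csharp
using System;
using System.Numerics;

// Assumed tangent frame of a flat, upward-facing terrain (world space, Y up as in Unity).
Vector3 tangent   = Vector3.UnitX;
Vector3 bitangent = Vector3.UnitZ;
Vector3 normal    = Vector3.UnitY;

// World space -> tangent space: project onto each axis of the tangent frame.
Vector3 WorldToTangent(Vector3 world) =>
    new Vector3(Vector3.Dot(world, tangent),
                Vector3.Dot(world, bitangent),
                Vector3.Dot(world, normal));

// The swizzle ShaderEyeOffset applies before calling Shader.SetGlobalVector: (x, y, z) -> (x, z, y).
static Vector3 Swizzle(Vector3 w) => new Vector3(w.X, w.Z, w.Y);

// Arbitrary example offset: 32 mm along world X, plus a little height and forward offset.
Vector3 eyeOffsetWorld = new Vector3(0.032f, 0.01f, 0.02f);

Console.WriteLine($"projected: {WorldToTangent(eyeOffsetWorld)}, swizzled: {Swizzle(eyeOffsetWorld)}");
```

On slopes the real tangent frame tilts away from these axes, so under this reading the swizzle is only an approximation there.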

Next, we modify RTP’s parallax shaders (I assume you can figure out where things go, so unchanged code is skipped with /* ... */):

```hlsl
/* ... */
uniform float3 _GlobalEyeOffset; // add this
/* ... */
_WorldSpaceCameraPos.xyz += _GlobalEyeOffset.xyz; // add this right at the beginning of the "void vert(...)" function
/* ... */
// float3 dirTRI=IN.viewDir.xyz; // change this ...
float3 dirTRI=IN.viewDir.xyz - _GlobalEyeOffset.xyz; // ... to this
/* ... */
#if defined(RTP_SNW_CHOOSEN_LAYER_NORM_0)
    // float4 SNOW_PM_OFFSET=float4( ParallaxOffset(tHA.x, _TERRAIN_ExtrudeHeight*_uv_Relief_z*COLOR_DAMP_VAL, IN.viewDir.xyz) ,0,0); // change this ...
    float4 SNOW_PM_OFFSET=float4( ParallaxOffset(tHA.x, _TERRAIN_ExtrudeHeight*_uv_Relief_z*COLOR_DAMP_VAL, IN.viewDir.xyz - _GlobalEyeOffset.xyz) ,0,0); // ... to this
#endif
#if defined(RTP_SNW_CHOOSEN_LAYER_NORM_1)
    // float4 SNOW_PM_OFFSET=float4( ParallaxOffset(tHA.y, _TERRAIN_ExtrudeHeight*_uv_Relief_z*COLOR_DAMP_VAL, IN.viewDir.xyz) ,0,0); // change this ...
    float4 SNOW_PM_OFFSET=float4( ParallaxOffset(tHA.y, _TERRAIN_ExtrudeHeight*_uv_Relief_z*COLOR_DAMP_VAL, IN.viewDir.xyz - _GlobalEyeOffset.xyz) ,0,0); // ... to this
#endif
#if defined(RTP_SNW_CHOOSEN_LAYER_NORM_2)
    // float4 SNOW_PM_OFFSET=float4( ParallaxOffset(tHA.z, _TERRAIN_ExtrudeHeight*_uv_Relief_z*COLOR_DAMP_VAL, IN.viewDir.xyz) ,0,0); // change this ...
    float4 SNOW_PM_OFFSET=float4( ParallaxOffset(tHA.z, _TERRAIN_ExtrudeHeight*_uv_Relief_z*COLOR_DAMP_VAL, IN.viewDir.xyz - _GlobalEyeOffset.xyz) ,0,0); // ... to this
#endif
#if defined(RTP_SNW_CHOOSEN_LAYER_NORM_3)
    // float4 SNOW_PM_OFFSET=float4( ParallaxOffset(tHA.w, _TERRAIN_ExtrudeHeight*_uv_Relief_z*COLOR_DAMP_VAL, IN.viewDir.xyz) ,0,0); // change this ...
    float4 SNOW_PM_OFFSET=float4( ParallaxOffset(tHA.w, _TERRAIN_ExtrudeHeight*_uv_Relief_z*COLOR_DAMP_VAL, IN.viewDir.xyz - _GlobalEyeOffset.xyz) ,0,0); // ... to this
#endif
/* ... */
// float3 EyeDirTan = -IN.viewDir.xyz; // change this ...
float3 EyeDirTan = -IN.viewDir.xyz -_GlobalEyeOffset; // ... to this
float slopeF=1-IN.viewDir.z;
/* ... */
#if defined(RTP_PM_SHADING) && !defined(UNITY_PASS_SHADOWCASTER)
    // rayPos.xy += ParallaxOffset(dot(splat_control1, tHA)+dot(splat_control2, tHB), _TERRAIN_ExtrudeHeight*_uv_Relief_z*COLOR_DAMP_VAL, IN.viewDir.xyz); // change this ...
    rayPos.xy += ParallaxOffset(dot(splat_control1, tHA)+dot(splat_control2, tHB), _TERRAIN_ExtrudeHeight*_uv_Relief_z*COLOR_DAMP_VAL, IN.viewDir.xyz + _GlobalEyeOffset.xyz); // ... to this
/* ... */
#if defined(RTP_PM_SHADING) && !defined(UNITY_PASS_SHADOWCASTER)
    // rayPos.xy += ParallaxOffset(hOffset, _TERRAIN_ExtrudeHeight*_uv_Relief_z*COLOR_DAMP_VAL, IN.viewDir.xyz); // change this ...
    rayPos.xy += ParallaxOffset(hOffset, _TERRAIN_ExtrudeHeight*_uv_Relief_z*COLOR_DAMP_VAL, IN.viewDir.xyz + _GlobalEyeOffset.xyz); // ... to this
/* ... */
#if defined(RTP_PM_SHADING) && !defined(UNITY_PASS_SHADOWCASTER)
    // rayPos.xy += ParallaxOffset(dot(splat_control1, tHA), _TERRAIN_ExtrudeHeight*_uv_Relief_z*COLOR_DAMP_VAL, IN.viewDir.xyz); // change this ...
    rayPos.xy += ParallaxOffset(dot(splat_control1, tHA), _TERRAIN_ExtrudeHeight*_uv_Relief_z*COLOR_DAMP_VAL, IN.viewDir.xyz + _GlobalEyeOffset.xyz); // ... to this
/* ... */
// float3 dir=IN.viewDir.xyz; // change this ...
float3 dir=IN.viewDir.xyz - _GlobalEyeOffset.xyz; // ... to this (do this 3 times!!)
/* ... */
// uvTRItmp.xy += ParallaxOffset(hgtXY, _TERRAIN_ExtrudeHeight*_uv_Relief_z*COLOR_DAMP_VAL, IN.viewDir.xyz); // change this ...
uvTRItmp.xy += ParallaxOffset(hgtXY, _TERRAIN_ExtrudeHeight*_uv_Relief_z*COLOR_DAMP_VAL, IN.viewDir.xyz + _GlobalEyeOffset.xyz); // ... to this
/* ... */
// rayPos.xy += ParallaxOffset(hOffset, _TERRAIN_ExtrudeHeight*_uv_Relief_z*COLOR_DAMP_VAL, IN.viewDir.xyz); // change this ...
rayPos.xy += ParallaxOffset(hOffset, _TERRAIN_ExtrudeHeight*_uv_Relief_z*COLOR_DAMP_VAL, IN.viewDir.xyz + _GlobalEyeOffset.xyz); // ... to this
// rayPos.xy += ParallaxOffset(dot(splat_control2, tHB), _TERRAIN_ExtrudeHeight*_uv_Relief_z*COLOR_DAMP_VAL, IN.viewDir.xyz); // change this ...
rayPos.xy += ParallaxOffset(dot(splat_control2, tHB), _TERRAIN_ExtrudeHeight*_uv_Relief_z*COLOR_DAMP_VAL, IN.viewDir.xyz + _GlobalEyeOffset.xyz); // ... to this
```

In principle, what we are doing is rather simple: wherever the shader uses the view direction, we offset it by whatever our script pushed into _GlobalEyeOffset. It is just the complexity of the RTP shaders and their compiler directives that might be a little intimidating.
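Geometrically, the offset is plain vector addition. If viewDir is the still-unnormalized vector from the surface point to the camera GameObject, and the eye sits at camera + eyeOffset with both expressed in the same space, then the vector from the surface to that eye is viewDir + eyeOffset; normalizing has to happen after the addition, otherwise the metric offset and the unit-length direction do not match in scale. Which sign ends up on a particular RTP line depends on how that variable is defined (EyeDirTan, for example, is the negated view direction). The sketch below uses made-up positions in a single shared space and plain C# types, not Unity or RTP code.

```csharp
using System;
using System.Numerics;

// Illustrative positions in one common space (e.g. tangent space); the values are made up.
Vector3 surfacePoint = new Vector3(0.5f, 1.0f, 0f);
Vector3 cameraPos    = new Vector3(0f, 0f, 1.6f);     // camera GameObject = "center eye"
Vector3 eyeOffset    = new Vector3(-0.032f, 0f, 0f);  // left eye, half of an assumed 64 mm IPD

// What the shader already has: the unnormalized surface -> camera vector.
Vector3 viewDir = cameraPos - surfacePoint;

// The correction: shift the view vector by the eye offset, then normalize.
Vector3 eyeViewDir = Vector3.Normalize(viewDir + eyeOffset);

// Cross-check: the direction computed directly from the actual eye position.
Vector3 direct = Vector3.Normalize((cameraPos + eyeOffset) - surfacePoint);

Console.WriteLine($"corrected: {eyeViewDir}, direct: {direct}, uncorrected: {Vector3.Normalize(viewDir)}");
```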

If you followed the steps above, you should now be able to enjoy RTP’s parallax mapping in all its VR glory again!

Disclaimer

Of course this is just a hack and nothing like a final solution. I am sure Unity will fix it sooner or later, maybe by correcting IN.viewDir for the eye position in the first place. Then again, this might break so many things that they will need to come up with something else. A while ago they mentioned adding an eye index to the default shader variables, but I have not heard anything about it since, which is the main reason I am posting my hack now. An eye index alone would not account for different IPDs (interpupillary distances) anyway, whereas ShaderEyeOffset.cs does.

So until there is an official way to handle VR in shaders, this solution works for me. Please also note that, on the one hand, I am not a shader guy at all, and on the other, I only use a subset of RTP’s features in my current project. It is therefore possible that my changes break something I am not aware of. So while I hope this might be useful to somebody, you have been warned.

There is also still a problem where objects using the blend mesh feature (with PM or POM enabled) do not fade seamlessly into the terrain. Unfortunately I cannot provide a screenshot, because the problem is only visible in VR! I also think it is not related to my changes, but then again, I might be wrong.

Also keep in mind that whenever you update the RTP package, you will lose all of these changes.

I am a freelance IT consultant, software developer and commercial pilot from Vienna, Austria.
